Linear Models

Linear ModelsLearning ObjectivesIntroduction to Linear ModelsThe method of least squaresRegarding optimality of Least squared estimatorsConfidence intervals and hypothesis testing related to regression modelsConfidence intervals for the regression parametersA confidence interval for the average response value at A prediction interval for a new response value at Hypothesis tests for the regression parametersSimulated example

Learning Objectives

Understand how to find the best fitting line between the explanatory variable and the dependent variable.

Know how to make confidence intervals for each regression coefficient and regression line.

Know how to perform a hypothesis testing for each regression coefficient and regression line.

Understand how to analyze real data using the simple and multiple regression models.

Know how to interpret computer outcomes from R.

Introduction to Linear Models

Often, we are interested in understanding the relationship between one variable, called the dependent variable or response, on a number of other variables, called the independent variables or predictors. The dependent variable is typically denoted by , and the independent variables are denoted as .

Example: Suppose we are interested in predicting a final score of stat 415 class based on a number of predictors.

Response: 415 final score ()

Predictors: hours of study per week (), number of previous math/stat classes (), number of cups of coffee before the exam (), final percentage of stat/math 414 (), etc.

Goal: predict a 415 final score based on given predictor values. For example, what would be the expected 415 final score when

- hours of study per week = 3, number of previous math/stat classes = 5, number of cups of coffee before the exam =2, final percentage of stat/math 414 = 89%, or

- hours of study per week = 5, number of previous math/stat classes = 2, number of cups of coffee before the exam =5, final percentage of stat/math 414 = 72%?

Suppose

where is a random error (). The goal is to learn from data. Once we know what is, we can predict the expected value of given different predictor values . In other words,

For example, the expected final score when hours of study per week = 3, number of previous math/stat classes = 5, number of cups of coffee before the exam =2, final percentage of stat/math 414 = 89% is .

A linear model assumes that is linear. In other words,

Then the goal is to learn from data. When (one explanatory model), a linear model is called as a simple linear regression model. Otherwise, the model is called multiple linear regression. In this class, we will mostly focus on simple linear regression.

The method of least squares

Suppose in the previous example only hours of study per week () matter in predicting the final score (). That is . Furthermore, the data suggest that the relationship between and is linear. We let be linear and assume , i.e.,

Final Score () = + Hours of study per week () + error, .

Now the question is to find the best and based on the data , , ..., where is a measurement of at .

Remark: depending on the nature of the data, we may treat an independent variable either to be random or deterministic. For example, we may consider be independent and identically distributed (i.i.d.) random pair of random variables (a.k.a random design), or we may regard be as fixed numbers (a.k.a fixed design). In the fixed design case, are independent but not identically distributed, since each has different mean value which depends on .

In regression analysis, we are interested in estimating the expectation of when . In the case of fixed design, estimating the regression line is equivalent to the expectation of . On the other hand, in random design, the regression line is the conditional expectation of given .

In this class, we will always assume deterministic predictors (fixed design), and to emphasize this fact, we will use lower-case letters to denote predictors.

Suppose each has the following representation:

where and each is a fixed number. Note this implies that . Given , how can we find ?

The basic idea is to choose a function of the predictors, , so that the sum of squared distances from the data to the function values is minimized. Since is linear, we find such that is minimized.

Let . Then the minimizer of are called the least square estimates of and . The corresponding statistics (i.e., replace observed with random ) are called least square estimators (LSE).

Theorem (LSE of and in simple linear regression) The least square estimators of are

Remark: It is important to note that both and are linear functions of the .

With some algebra, we can show

where , .

Proof. Following the standard procedures for minimizing a function, we find the partial derivatives

Setting both to zero we get

From these, we solve for and , and treat them as estimates (i.e., putting on hats).

Remark: the same least squares method can be applied when we have more than one predictor variable. Namely, when , the least square estimates of are

Theorem (MLE of , and in simple linear regression) The maximum likelihood estimates of and are the same as least squares estimates. The maximum likelihood estimator of is given by

Theorem (Distribution of LSE of and ) Under the simple linear model (1) with Normally distributed errors ,

,

Regarding optimality of Least squared estimators

- Least squared estimators and are unbiased for and . In fact, under the linear model (1) and normally distributed , and are minimum variance unbiased estimators (MVUEs).

- Note that without the normality assumption of error terms, the least squared estimators and are still unbiased. In what sense the least squared estimators and are optimal beyond being unbiased? If the distribution of an error term is unknown but has the constant variance (i.e., for all ), the least squared estimators and can be proved to be the Best Linear Unbiased Estimators (BLUEs) for and (Gauss-Markov Theorem). That is, the least square estimators are the estimators with the smallest variance among all unbiased estimators that are linear functions of the ’s.

Confidence intervals and hypothesis testing related to regression models

Recall the distribution of LSE and under the linear model with :

,

Thus, the standardized regression coefficients are random variables, i.e.,

Unfortunately, is unknown. Therefore, we replace with a sample variance estimator defined as

We note that the distribution of the residual sum of squares divided by is , i.e.,

In particular, is an unbiased estimator of .

Moreover, we can show that , and are independent.

Replacing with in (2), we have,

since,

These results allow us to construct confidence intervals for and , and to carry out hypothesis tests using t-tests.

Confidence intervals for the regression parameters

Using

as pivotal quantities,

a (two-sided) confidence interval for is

a (two-sided) confidence interval for is

A confidence interval for the average response value at

Often we are interested in estimating the average response value at a particular , i.e., estimate . Let . We want to construct a confidence interval for .

The estimator of the expected value of at is .

The distribution of the estimator of is

Standardization gives

and by replacing unknown with , we obtain the following pivotal quantity:

Therefore, we can construct a (two-sided) confidence interval for as

A prediction interval for a new response value at

We are sometimes interested in predicting new value of given . Where can we expect to see the next data point sampled? Note that even though we know the true regression line, we cannot perfectly predict the new value of because of the existence of a random error. In particular, when ,

where .

Suppose we know the true regression line and also . Since , we can predict that % of the times, the next value will be within .

Of course are unknown, and we have to estimate these unknown quantities. The variation in the prediction of a new response depends on two components:

- the variation due to estimating the mean with .

- the variation due to new random error .

Adding the two variance components, we obtain

A prediction interval for is

If you sample responses at many times, we would expect that next value to lie within this prediction interval in 95% of the samples.

Remark: comparing confidence intervals and prediction intervals

prediction intervals are always wider than confidence intervals

As we sample an increasing number of points , the width of a confidence interval for will go to

As we sample an increasing number of points , the width of a prediction interval for future draws will not go to

Hypothesis tests for the regression parameters

Suppose we are interested in testing whether the slope is the same as the hypothesized value or not (usually we are interested in testing whether the slope is zero or not, i.e., )

, vs. .

Test statistic

Under , .

Reject if the observed test statistic is in the rejection region. For example, when , reject if or .

The same steps are applied for testing about (), with the test statistic

Simulated example

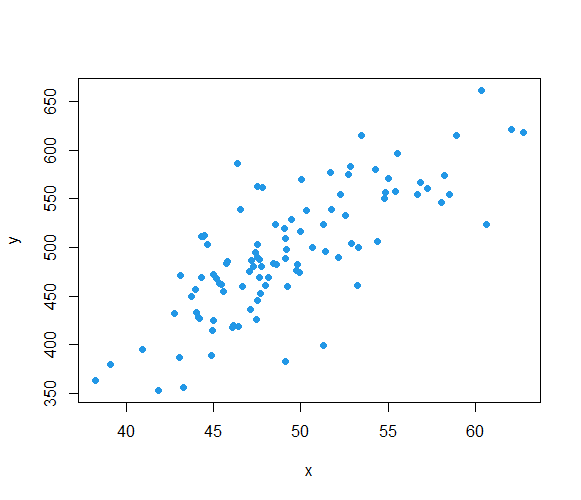

x# true parametersbeta0 <- 1beta1 <- 10sigma <- 40# generate a random sample of size nset.seed(1234)n <- 100x <- rnorm(n, 50, 5)y <- rnorm(n, beta0 + beta1 * x, sigma)plot(x, y, pch = 16, col = 4)

By hand

xxxxxxxxxx> cor(x, y)[1] 0.7659389> mean(x)[1] 49.21619> mean(y)[1] 494.8116> var(x)[1] 25.22075> var(y)[1] 4121.471

R output

xxxxxxxxxx# Fitting a linear modelmodel <- lm(y ~ x)# LM output> summary(model)Call:lm(formula = y ~ x)Residuals: Min 1Q Median 3Q Max -115.450 -24.560 0.094 23.458 119.509 Coefficients: Estimate Std. Error t value Pr(>|t|) (Intercept) 12.9201 41.0697 0.315 0.754 x 9.7913 0.8302 11.794 <2e-16 ***---Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1Residual standard error: 41.48 on 98 degrees of freedomMultiple R-squared: 0.5867, Adjusted R-squared: 0.5824 F-statistic: 139.1 on 1 and 98 DF, p-value: < 2.2e-16# Confidence intervals> confint(model,level = .95) 2.5 % 97.5 %(Intercept) -68.581325 94.42161x 8.143801 11.43884# 95% confidence interval when x = 50> predict(model,newdata = data.frame(x=50),interval = "confidence",level = .95) fit lwr upr1 502.4862 494.1531 510.8192# 95% prediction interval when x = 50> predict(model,newdata = data.frame(x=50),interval = "prediction",level = .95) fit lwr upr1 502.4862 419.7414 585.231