Bayesian Estimation


Introduction to Bayesian Estimation

Bayes' Theorem

For two events $A$ and $B$ with positive probability,

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}.$$

Example

Suppose we have one fair coin, and one biased coin which lands Heads with probability . One of the coins is picked at random and flipped three times. It lands Heads all three times. Given this information, what is the probability that the coin which is picked is the fair one?
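The coin question is a direct application of Bayes' theorem, with the law of total probability giving the denominator. The sketch below keeps the biased coin's Heads probability as a free parameter `p_biased`. The value 3/4 in the usage line is a hypothetical choice, not necessarily the one intended in the example. Exact fractions avoid rounding.

```python
from fractions import Fraction


def prob_fair_given_heads(p_biased: Fraction, n_heads: int = 3) -> Fraction:
    """P(fair coin | n_heads Heads in n_heads flips), each coin picked with probability 1/2.

    p_biased is the biased coin's Heads probability (a free parameter in this sketch).
    """
    prior_fair = Fraction(1, 2)
    prior_biased = Fraction(1, 2)
    like_fair = Fraction(1, 2) ** n_heads      # P(all Heads | fair coin)
    like_biased = p_biased ** n_heads          # P(all Heads | biased coin)
    # Law of total probability: P(all Heads)
    evidence = like_fair * prior_fair + like_biased * prior_biased
    # Bayes' theorem: P(fair | all Heads)
    return like_fair * prior_fair / evidence


# Hypothetical Heads probability 3/4 for the biased coin:
print(prob_fair_given_heads(Fraction(3, 4)))  # 8/35
```

With `p_biased = 3/4` the three Heads lower the probability of the fair coin from 1/2 to 8/35.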

Suppose now we have many coins with different head probabilities. We have prior information that most of the coins are close to being unbiased. How can we incorporate such prior information in our inference for the parameter?

Idea: treat the parameter $\theta$ as a random variable, and make inferences about $\theta$ based on the distribution of $\theta$ given the observed data (a.k.a. the posterior distribution).

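$\pi(\theta)$ is the pmf/pdf of the prior distribution of $\theta$.

$f(\mathbf{x} \mid \theta)$ is the conditional pmf/pdf of the data $\mathbf{X} = (X_1, \dots, X_n)$ given $\theta$. Viewed as a function of $\theta$, this is the likelihood function of $\theta$.

$\pi(\theta \mid \mathbf{x})$ is the conditional pmf/pdf of $\theta$ given the observed data $\mathbf{x}$. This is the pmf/pdf of the posterior distribution of $\theta$ given the data.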

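By Bayes' theorem,

$$\pi(\theta \mid \mathbf{x}) = \frac{f(\mathbf{x} \mid \theta)\,\pi(\theta)}{\int f(\mathbf{x} \mid \theta')\,\pi(\theta')\,d\theta'} \propto f(\mathbf{x} \mid \theta)\,\pi(\theta),$$

where the integral is replaced by a sum when $\theta$ is discrete.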
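For the "many coins" setting, the simplest case is a prior on finitely many Heads probabilities. Bayes' theorem then reduces to "likelihood times prior, divided by the sum". The three Heads probabilities and the prior weights below are hypothetical. They encode "most coins are close to fair".

```python
def discrete_posterior(thetas, prior, heads, tails):
    """Posterior pmf over a finite set of Heads probabilities theta, via Bayes' theorem."""
    # Unnormalized posterior f(x | theta) * pi(theta). The binomial coefficient
    # is the same for every theta, so it cancels and is left out.
    unnorm = [t ** heads * (1 - t) ** tails * p for t, p in zip(thetas, prior)]
    evidence = sum(unnorm)  # marginal probability of the data: sum over theta
    return [u / evidence for u in unnorm]


thetas = [0.3, 0.5, 0.7]   # hypothetical Heads probabilities
prior = [0.1, 0.8, 0.1]    # hypothetical prior: most coins are close to fair
post = discrete_posterior(thetas, prior, heads=3, tails=0)
```

After three Heads, probability mass moves toward $\theta = 0.7$. The strong prior still keeps $\theta = 0.5$ as the most probable value.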

Choice of a prior distribution

Based on prior information (e.g. previous studies)

Non-informative prior

Conjugate prior

- a prior distribution for which the posterior distribution belongs to the same family of distributions as the prior
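A standard illustration of conjugacy, chosen here for the coin setting rather than taken from these notes, is a Beta prior with Bernoulli/binomial data. The product $\theta^{a-1}(1-\theta)^{b-1}\cdot\theta^{h}(1-\theta)^{t}$ is again a Beta kernel, so the posterior is Beta$(a+h,\,b+t)$. The sketch checks this numerically: posterior density divided by prior times likelihood should not depend on $\theta$. The Beta(10, 10) prior is a hypothetical choice.

```python
from math import exp, lgamma, log


def beta_binomial_update(a, b, heads, tails):
    """Beta(a, b) prior + observed Heads/Tails -> Beta(a + heads, b + tails) posterior."""
    return a + heads, b + tails


def beta_pdf(t, a, b):
    """Density of Beta(a, b) at 0 < t < 1, computed on the log scale for stability."""
    log_norm = lgamma(a + b) - lgamma(a) - lgamma(b)
    return exp(log_norm + (a - 1) * log(t) + (b - 1) * log(1 - t))


a0, b0 = 10, 10            # hypothetical prior concentrated near 1/2
heads, tails = 3, 0        # three Heads, as in the coin example
a1, b1 = beta_binomial_update(a0, b0, heads, tails)

# Conjugacy check: posterior / (prior * likelihood) is the same constant for every t.
ratios = [beta_pdf(t, a1, b1) / (beta_pdf(t, a0, b0) * t ** heads * (1 - t) ** tails)
          for t in (0.2, 0.5, 0.8)]
```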

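A useful trick for computing posterior distributions: a pmf/pdf is completely specified by the part of it that involves its argument. Thus we first find the form of the posterior pdf as a function of $\theta$, $\pi(\theta \mid \mathbf{x}) \propto f(\mathbf{x} \mid \theta)\,\pi(\theta)$, dropping every factor that does not involve $\theta$. We then simply find the "constant" (constant with respect to $\theta$, although it is, of course, actually some function of $\mathbf{x}$) that makes the expression integrate to 1.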
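When the normalizing constant has no recognizable closed form, it can be found numerically from the kernel alone. In this sketch, `normalize_on_grid` is a hypothetical helper that uses the midpoint rule on $(0, 1)$. It is applied to a flat prior on a coin's Heads probability after three Heads, where the kernel is $\theta^3$.

```python
def normalize_on_grid(kernel, n=100_000):
    """Return c such that c * kernel(theta) integrates to 1 over (0, 1) (midpoint rule)."""
    h = 1.0 / n
    integral = sum(kernel((i + 0.5) * h) for i in range(n)) * h
    return 1.0 / integral


# Flat prior pi(theta) = 1 on (0, 1) and three Heads: posterior kernel is theta^3.
c = normalize_on_grid(lambda t: t ** 3)


def posterior(t):
    """Normalized posterior density c * theta^3."""
    return c * t ** 3
```

Analytically, $\int_0^1 \theta^3\,d\theta = 1/4$, so $c = 4$ and the posterior is $4\theta^3$, i.e. Beta(4, 1). The numerical `c` agrees to many digits.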

Example: Albert becomes rich after inventing a new type of toothpaste and invests all his money in a random stock. Let $X$ be a random variable that represents the value of the stock today, and let $\mu$ be the long-term mean value of the stock. He found that the value of the stock today was $80. He thinks that this price may be a random realization around the long-term mean $\mu$, and so he assumes that the conditional distribution of $X$ given $\mu$ is $N(\mu, \sigma^2)$ with a known constant $\sigma$. Also, based on the past prices of the stock, it seems reasonable to assume that the long-term mean value $\mu$ is a random variable following $N(\mu_0, \tau^2)$ with known constants $\mu_0$ and $\tau$. Find the posterior distribution of $\mu$.
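This sketch assumes one reading of the model: $X \mid \mu \sim N(\mu, \sigma^2)$ and $\mu \sim N(\mu_0, \tau^2)$, with $\sigma^2$, $\mu_0$, $\tau^2$ known. Keeping only the factors involving $\mu$ and completing the square gives

$$\pi(\mu \mid x) \propto \exp\!\Big(-\frac{(x-\mu)^2}{2\sigma^2} - \frac{(\mu-\mu_0)^2}{2\tau^2}\Big) \;\Longrightarrow\; \mu \mid x \sim N\!\Big(\frac{\tau^2 x + \sigma^2 \mu_0}{\sigma^2 + \tau^2},\; \frac{\sigma^2 \tau^2}{\sigma^2 + \tau^2}\Big).$$

The helper below computes these two numbers. The constants in the usage line are hypothetical, not the example's own values.

```python
def normal_normal_posterior(x, sigma2, mu0, tau2):
    """Posterior (mean, variance) of mu when X | mu ~ N(mu, sigma2) and mu ~ N(mu0, tau2).

    Assumes sigma2 and tau2 are known. Precisions (inverse variances) add, and the
    posterior mean is the precision-weighted average of the observation and the prior mean.
    """
    precision = 1.0 / sigma2 + 1.0 / tau2
    var = 1.0 / precision
    mean = var * (x / sigma2 + mu0 / tau2)
    return mean, var


# Hypothetical constants: x = 80, sigma^2 = 100, mu0 = 100, tau^2 = 100.
m, v = normal_normal_posterior(x=80.0, sigma2=100.0, mu0=100.0, tau2=100.0)
```

With equal variances, the posterior mean is the midpoint 90 of the observation and the prior mean, and the variance is halved to 50 (up to floating-point rounding).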

Point Estimation

Bayesian inference about a parameter $\theta$ is based solely on the posterior distribution of $\theta$. If we have to make a single "best guess" for $\theta$ (point estimation), which value should we choose?

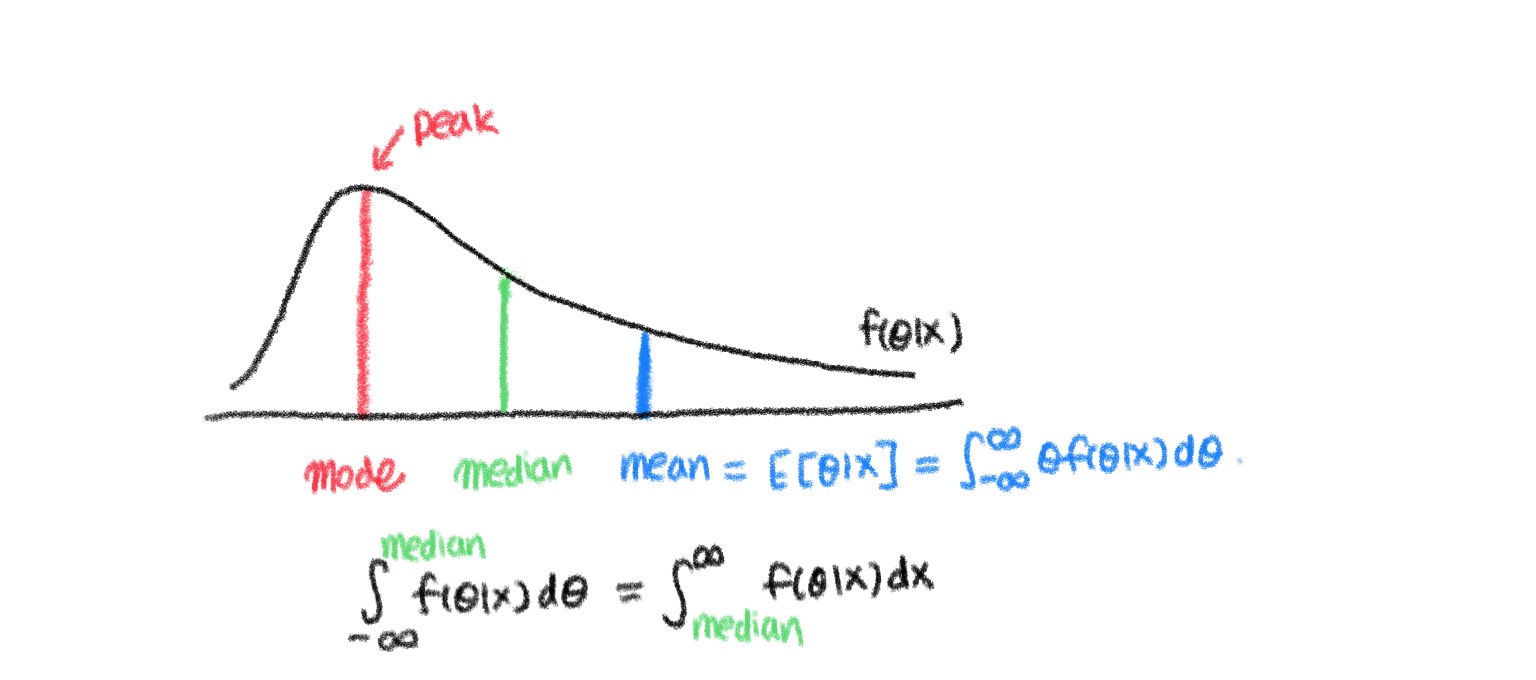

The three most common summary statistics of a posterior distribution are:

- Posterior mean: the expected value of $\theta$ under the posterior distribution, $E[\theta \mid \mathbf{x}]$.

- Posterior median: the value that divides the posterior distribution in half.

- Posterior mode: the peak of the posterior distribution.
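For a skewed posterior the three summaries differ. The sketch approximates all three on a fine grid over $(0, 1)$ from an unnormalized kernel. It uses a Beta(3, 7) kernel as a hypothetical posterior for a Heads probability. Exact values are mean 0.3 and mode 0.25, with the median in between (about 0.286).

```python
def posterior_summaries(kernel, n=100_000):
    """Approximate posterior mean, median and mode on a midpoint grid over (0, 1)."""
    h = 1.0 / n
    grid = [(i + 0.5) * h for i in range(n)]
    weights = [kernel(t) for t in grid]
    total = sum(weights)
    probs = [w / total for w in weights]             # normalized posterior masses
    mean = sum(t * p for t, p in zip(grid, probs))
    cum, median = 0.0, grid[-1]
    for t, p in zip(grid, probs):
        cum += p
        if cum >= 0.5:                                # first point where the CDF reaches 1/2
            median = t
            break
    mode = max(zip(weights, grid))[1]                 # grid point with the highest density
    return mean, median, mode


# Hypothetical right-skewed posterior: Beta(3, 7) kernel theta^2 (1 - theta)^6.
mean, median, mode = posterior_summaries(lambda t: t ** 2 * (1 - t) ** 6)
```

For this right-skewed posterior, mode < median < mean.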

Remark: the "best" guess depends on the penalties attached to the various errors caused by incorrect guesses.

We use a "loss" function $L(\theta, \hat{\theta})$ to measure the penalty of choosing $\hat{\theta}$ as an estimate when $\theta$ is the true value of the parameter.

Examples:

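- Squared error loss function: $L(\theta, \hat{\theta}) = (\theta - \hat{\theta})^2$

- Absolute error loss function: $L(\theta, \hat{\theta}) = |\theta - \hat{\theta}|$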

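Definition (Bayes estimator): a Bayes estimator under a loss function $L$ is an estimator $\hat{\theta}$ that minimizes the posterior expected loss, $E[L(\theta, \hat{\theta}) \mid \mathbf{x}] = \int L(\theta, \hat{\theta})\,\pi(\theta \mid \mathbf{x})\,d\theta$.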

- The Bayes estimator under the squared error loss is the posterior mean.

- The Bayes estimator under the absolute error loss is the posterior median.
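The two facts above can be checked by brute force. The sketch computes the posterior expected loss of each candidate estimate and keeps the minimizer. It uses a small discrete posterior whose support and probabilities are made up for illustration. Its mean is $0(0.1) + 1(0.3) + 2(0.4) + 10(0.2) = 3.1$, and its median is 2.

```python
def expected_loss(a, values, probs, loss):
    """Posterior expected loss E[L(theta, a) | x] for a discrete posterior."""
    return sum(p * loss(v, a) for v, p in zip(values, probs))


def bayes_estimate(values, probs, loss, candidates):
    """Candidate estimate with the smallest posterior expected loss (brute-force search)."""
    return min(candidates, key=lambda a: expected_loss(a, values, probs, loss))


def squared_loss(theta, a):
    return (theta - a) ** 2


def absolute_loss(theta, a):
    return abs(theta - a)


values = [0, 1, 2, 10]               # hypothetical posterior support
probs = [0.1, 0.3, 0.4, 0.2]         # hypothetical posterior pmf
candidates = [i / 100 for i in range(1001)]   # 0.00, 0.01, ..., 10.00

est_sq = bayes_estimate(values, probs, squared_loss, candidates)    # posterior mean, 3.1
est_abs = bayes_estimate(values, probs, absolute_loss, candidates)  # posterior median, 2.0
```

The outlying value 10 pulls the squared-loss estimate upward. The absolute-loss estimate is unaffected by how far out that value lies.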

Example: compute the Bayes estimator for the long-term mean $\mu$ in the previous example when the loss function is the squared error loss.

Bayesian Credible Interval

A Bayesian counterpart to a confidence interval is a Bayesian credible interval.

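Definition ($100(1-\alpha)\%$ credible interval): a $100(1-\alpha)\%$ Bayesian credible (or posterior) interval is any interval $[a, b]$ such that $P(a \le \theta \le b \mid \mathbf{x}) = 1 - \alpha$.

- One common choice is to let $a$ and $b$ be the $\alpha/2$ quantile and the $1 - \alpha/2$ quantile of the posterior distribution, respectively.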
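For a normal posterior, such as the one in Albert's example under a normal prior, the equal-tailed interval uses the two posterior quantiles. The sketch relies on `statistics.NormalDist` from the Python standard library (3.8+). The posterior $N(90, 50)$ in the usage line is hypothetical.

```python
from statistics import NormalDist


def normal_credible_interval(post_mean, post_var, level=0.95):
    """Equal-tailed credible interval: the alpha/2 and 1 - alpha/2 posterior quantiles."""
    alpha = 1.0 - level
    post = NormalDist(mu=post_mean, sigma=post_var ** 0.5)
    return post.inv_cdf(alpha / 2), post.inv_cdf(1 - alpha / 2)


lo, hi = normal_credible_interval(90.0, 50.0)  # hypothetical posterior N(90, 50)
```

For a normal posterior this is the familiar posterior mean $\pm\, 1.96 \times$ posterior standard deviation, here roughly $(76.1,\ 103.9)$.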

Remark (Difference from a confidence interval)

Credible interval: given the observed data, the posterior probability that the parameter lies in a $100(1-\alpha)\%$ credible interval is $100(1-\alpha)\%$.

Confidence interval: under repeated sampling, $100(1-\alpha)\%$ of the constructed confidence intervals are expected to contain the true parameter.

- For any single computed confidence interval, the probability that it contains the true (fixed) parameter is either zero or one.
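The frequentist statement is about the procedure, not about a single interval. The simulation below makes this concrete. It repeatedly draws samples from a normal population with a fixed mean and known $\sigma$ (both assumptions of the sketch) and builds the 95% z-interval $\bar{x} \pm z\,\sigma/\sqrt{n}$ each time. Each interval either contains the fixed mean or not. Only the long-run fraction is about 95%.

```python
import random
from statistics import NormalDist


def ci_coverage(true_mu=0.0, sigma=1.0, n=25, level=0.95, reps=10_000, seed=0):
    """Fraction of simulated z-intervals xbar +/- z * sigma / sqrt(n) that contain true_mu."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)   # about 1.96 for level = 0.95
    half_width = z * sigma / n ** 0.5
    hits = 0
    for _ in range(reps):
        xbar = sum(rng.gauss(true_mu, sigma) for _ in range(n)) / n
        # Each single interval either contains the fixed true_mu (1) or not (0).
        hits += (xbar - half_width <= true_mu <= xbar + half_width)
    return hits / reps


coverage = ci_coverage()
```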

Example: In the example of Albert's stock investment, find the 95% posterior interval for the long-term mean $\mu$.